Twitter has a troll problem.

If you’re white, male, not a celebrity and don’t tend to say anything much that’s controversial, then blocking the occasional drive-by troll works perfectly well. If at least one of those things doesn’t apply to you there’s plenty of evidence that Twitter is a little bit broken and better blocking and moderation functionality is needed.

Twitter does have a function to report abuse, but I’m seeing complaints that it’s far too cumbersome, and that has a (possibly deliberate) effect of limiting its use. At least one person has noted that it takes more effort to report an account for abuse than it does for a troll to create yet another throwaway sock-puppet account, a recipe for a perpetual game of whack-a-mole.

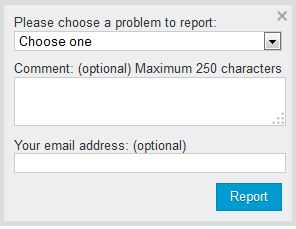

In contrast, here’s the Report Abuse form from The Guardian’s online community. There is no real reason why reporting abuse on Twitter needs to be any more complicated than this.

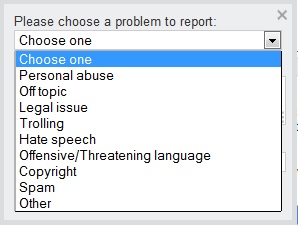

And here’s the dropdown listing the reasons. Not all of those would be appropriate for Twitter; “Spam” and “Personal Abuse” certainly are, the others less so.

While I approve of Twitter taking a far tougher line against one-to-one harassment, I am not at all convinced that more generalised speech codes are appropriate for a site on the scale of Twitter. Such things are perfectly acceptable and even expected for smaller community sites where it’s part of the deal when you sign up and reflects the ethos behind the site. Indeed, most such community sites are only as good as their moderation, and there are as many where it’s done badly as those where it’s done well. We can all name sites where either lack of moderation or overly partisan moderation creates a toxic environment.

But for a global site with millions of users the idea of speech codes opens a lot of cans of worms which ultimately boil down to power. Who decides what is and isn’t acceptable speech? Whose community values should they reflect? Who gets to shut down speech they don’t like and who doesn’t? I can’t imagine radical feminists taking kindly to conservative Christians telling them what they can or cannot say on Twitter. Or vice versa.

Better to make it easier for groups of people whose values clash so badly that they cannot coexist in the same space to be able to avoid one another more effectively. Yes, there is a danger of creating echo-chambers; as I’ve said before, if you spend too much time in an echo-chamber, then your bullshit detectors cease to function effectively. But Twitter’s current failure mode is in the other direction; pitchfork-wielding mobs who pile on to anyone who dares to say something they don’t like, overwhelming their conversations.

At the moment, the only moderation tool available to individual users is the block function, which is a bit of a blunt instrument, and is only available retrospectively, once the troll has already invaded your space.

There are other things Twitter could implement if they wanted to:

For a start, now that Twitter has threaded conversations, how about adding the ability to moderate responses to your own posts? Facebook and Google+ both allow you to delete other people’s comments below your own status updates. The equivalent in Twitter would be to allow you to delete other people’s tweets that were @replies to your own. If that’s too much against the spirit of Twitter, which it may well be, at least give the power to sever the link so the offending tweet doesn’t appear as part of the threaded conversation.

Then perhaps there ought to be some limits on who can @reply to you in the first place. I’ve seen one suggestion for a setting that prevents accounts whose age is below a user-specified number of days from appearing in your replies tab, which would filter out newly-created sock-puppet accounts. A filter on follower count would have a similar effect; sock-puppets won’t have many friends.
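To show how little logic a filter like this needs, here is a rough sketch in Python. Everything in it is an assumption made up for illustration: the `Account` fields, the `passes_reply_filter` function and the threshold settings are hypothetical, not anything from Twitter's actual data model or API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Account:
    # Hypothetical fields for illustration; not Twitter's real data model.
    handle: str
    created_at: datetime
    follower_count: int


def passes_reply_filter(account, now, min_age_days=0, min_followers=0):
    """Return True if replies from this account should show in the replies tab.

    Both thresholds are user-chosen settings; the defaults of 0 let
    everything through, which matches how Twitter behaves today.
    """
    old_enough = now - account.created_at >= timedelta(days=min_age_days)
    enough_followers = account.follower_count >= min_followers
    return old_enough and enough_followers


# Made-up accounts: a day-old sock-puppet and a long-standing regular user.
now = datetime(2020, 1, 31, tzinfo=timezone.utc)
sock_puppet = Account("throwaway123", now - timedelta(days=1), 2)
regular = Account("longtime_user", now - timedelta(days=900), 350)
```

With a setting of, say, seven days and ten followers, `passes_reply_filter(sock_puppet, now, 7, 10)` is false and the same call for `regular` is true. The tweets themselves would still exist; they just wouldn't land in your replies tab.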

Another idea would be to filter on the number of people you follow who have blocked the account. This won’t be as much use against sock-puppets, but will be effective against persistent trolls who have proved sufficiently annoying or abusive to other people in your network.
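Again as a sketch rather than a real implementation, the check could be as simple as counting blocks among the people you follow. The `blocks` mapping, the function name and the default threshold of three are all assumptions of mine; how Twitter actually stores block lists isn't something I know.

```python
def blocked_by_my_network(account, i_follow, blocks, threshold=3):
    """Return True if enough of the people I follow have blocked this account.

    `i_follow` is the collection of handles I follow.
    `blocks` maps a handle to the set of handles that user has blocked
    (a hypothetical structure, invented for this example).
    `threshold` is a user-chosen setting.
    """
    block_count = sum(
        1 for friend in i_follow if account in blocks.get(friend, set())
    )
    return block_count >= threshold


# Made-up data: three of the four people I follow have blocked the same troll.
i_follow = ["alice", "bob", "carol", "dave"]
blocks = {
    "alice": {"persistent_troll"},
    "bob": {"persistent_troll", "spam_bot"},
    "carol": {"persistent_troll"},
}
```

Here `blocked_by_my_network("persistent_troll", i_follow, blocks)` is true, while `spam_bot`, blocked by only one person, stays under the threshold. Making the threshold a user setting matters: set it too low and a single friend's grudge decides who you get to hear from.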

All of these are things which Twitter could implement quite easily if the will were there. But instead they seem more interested in spending their development effort on Facebook-style algorithmic feeds.

I think few people would deny that Twitter has a troll problem. For us regular users with a few hundred followers it’s easy enough to block the occasional drive-by troll, especially if we’re male. But it’s a different story for public figures, especially women, who can find themselves bombarded with hundreds of abusive messages.
